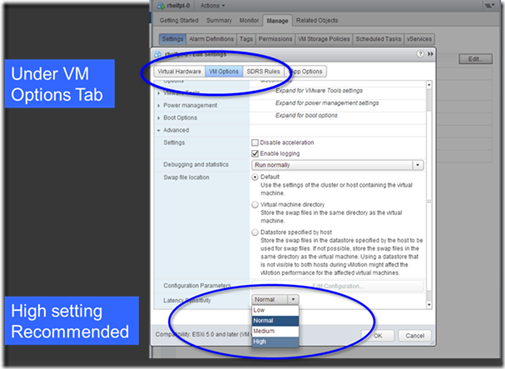

Today at VMworld US vSphere 5.5 was announced in the keynote and one of the new features released with vSphere 5.5 is the Latency-Sensitivity Feature. The latency-sensitivity feature is applied per VM, and thus a vSphere host can run a mix of normal VMs and VMs with this feature enabled. To enable the latency sensitivity for a given VM from the UI, access the Advanced settings from the VM Options tab in the VM’s Edit Settings pop-up window and select high for the Latency Sensitivity option as shown below:
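If you prefer to inspect or script the configuration rather than use the UI, our understanding is that this option is stored in the VM's .vmx file as the advanced setting `sched.cpu.latencySensitivity`. The Python sketch below only renders that line. The key name and the list of accepted levels are assumptions to verify against your vSphere version, and the helper `latency_sensitivity_setting` is hypothetical.

```python
# Hedged sketch: render the .vmx line we believe the
# "Latency Sensitivity = High" UI option corresponds to.
# ASSUMPTION: the key "sched.cpu.latencySensitivity" and the levels below
# reflect our understanding of vSphere 5.5; verify on your own version.

LATENCY_SENSITIVITY_KEY = "sched.cpu.latencySensitivity"
ALLOWED_LEVELS = ("low", "normal", "medium", "high")


def latency_sensitivity_setting(level: str = "high") -> str:
    """Return one .vmx line that sets the VM's latency-sensitivity level."""
    level = level.lower()
    if level not in ALLOWED_LEVELS:
        raise ValueError(f"unsupported latency sensitivity level: {level!r}")
    # .vmx entries are written as: key = "value"
    return f'{LATENCY_SENSITIVITY_KEY} = "{level}"'


if __name__ == "__main__":
    print(latency_sensitivity_setting("High"))
```

Normalizing the level to lowercase lets the helper accept the capitalized label shown in the UI.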

What the Latency-Sensitivity Feature Does

With the latency-sensitivity feature enabled, the CPU scheduler determines whether exclusive access to PCPUs can be granted, weighing various factors including whether the PCPUs are over-subscribed. Reserving 100% of the VM's VCPU time increases the chance of getting exclusive PCPU access. With exclusive access granted, each VCPU entirely owns a specific PCPU, and no other VCPU is allowed to run on it. This achieves nearly zero ready time, improving response time and reducing jitter under CPU contention. Reserving 100% of CPU time alone (without latency sensitivity enabled) can yield a similar effect over relatively long time scales, but the VM may still have to wait over short time spans, which can add jitter. Note that even with exclusive PCPU access, the last-level cache (LLC) is still shared with other VMs residing on the same socket.
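To make "reserving 100% of VCPU time" concrete: vSphere expresses CPU reservations in MHz, so a full reservation works out to the number of VCPUs times the per-core clock rate. The sketch below shows that arithmetic plus a crude over-subscription check. Both helpers are hypothetical, and this is a toy model, not the ESXi CPU scheduler's actual decision logic, which weighs more factors than these.

```python
# Toy model (hypothetical helpers); NOT the real ESXi CPU scheduler logic.


def full_cpu_reservation_mhz(num_vcpus: int, core_mhz: int) -> int:
    """CPU reservation, in MHz, that covers 100% of every VCPU's time."""
    # A VCPU can consume at most one physical core's clock rate,
    # so a 100% reservation is simply VCPUs x per-core frequency.
    return num_vcpus * core_mhz


def pcpus_oversubscribed(host_pcpus: int, powered_on_vcpus: int) -> bool:
    """True when the host runs more VCPUs than it has PCPUs."""
    return powered_on_vcpus > host_pcpus


if __name__ == "__main__":
    print(full_cpu_reservation_mhz(4, 2600))
    print(pcpus_oversubscribed(host_pcpus=16, powered_on_vcpus=20))
```

For example, a 4-VCPU VM on 2,600 MHz cores needs a 10,400 MHz reservation to have all of its VCPU time reserved.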

The latency-sensitivity feature requires the user to reserve the VM's memory so that the full memory size requested by the VM is always available. Without a memory reservation, vSphere may reclaim memory from the VM when free host memory becomes scarce. Some memory reclamation techniques, such as ballooning and hypervisor swapping, can significantly degrade VM performance when the VM accesses memory that has been swapped out to disk. A memory reservation prevents such degradation.
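The memory requirement can be expressed as a simple check: the reservation must cover the VM's entire configured memory, and only memory above the reservation is exposed to reclamation such as ballooning or hypervisor swapping. The `VmConfig` type and both helpers below are hypothetical, simplified stand-ins, not a vSphere API.

```python
from dataclasses import dataclass


@dataclass
class VmConfig:
    """Hypothetical, simplified view of a VM's memory settings (in MB)."""
    memory_mb: int            # configured guest memory size
    mem_reservation_mb: int   # host memory guaranteed to the VM


def reclaimable_memory_mb(vm: VmConfig) -> int:
    """Memory the host could still reclaim (e.g. balloon or swap) under pressure."""
    # Reserved memory is guaranteed; only the unreserved part can be reclaimed.
    return max(vm.memory_mb - vm.mem_reservation_mb, 0)


def meets_memory_requirement(vm: VmConfig) -> bool:
    """Latency sensitivity requires the whole memory size to be reserved."""
    return vm.mem_reservation_mb >= vm.memory_mb


if __name__ == "__main__":
    half = VmConfig(memory_mb=16384, mem_reservation_mb=8192)
    print(reclaimable_memory_mb(half), meets_memory_requirement(half))
```

A VM with 16 GB configured but only 8 GB reserved leaves 8 GB exposed to reclamation and fails the requirement.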

Bypassing Virtualization Layers:

Once exclusive access to PCPUs is obtained, the feature allows the VCPUs to bypass the VMkernel's CPU scheduling layer and halt directly in the VMM, since there are no other contexts that need to be scheduled. This avoids both the cost of running the CPU scheduler code and the cost of switching between the VMkernel and the VMM, leading to much faster VCPU halt/wake-up operations. VCPUs still experience switches between direct guest code execution and the VMM, but this operation is relatively cheap with the hardware-assisted virtualization technologies provided by recent CPU architectures.
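The difference between the two halt paths can be illustrated with a small model that lists the layers a halting VCPU passes through. This is a simplified, hypothetical sketch based only on the description above; the step names are illustrative labels, and real ESXi code paths involve considerably more detail.

```python
from typing import List


def vcpu_halt_path(exclusive_pcpu: bool) -> List[str]:
    """Illustrative (hypothetical) sequence of steps when a VCPU goes idle."""
    # The guest -> VMM switch happens in both cases; with hardware-assisted
    # virtualization it is relatively cheap.
    steps = ["guest idles (e.g. HLT)", "exit to VMM"]
    if exclusive_pcpu:
        # No other context can run on this PCPU, so the VMM halts directly.
        steps.append("VMM halts PCPU directly")
    else:
        # The VMkernel CPU scheduler must run to pick another context.
        steps += ["switch VMM -> VMkernel", "VMkernel CPU scheduler runs"]
    return steps


if __name__ == "__main__":
    for exclusive in (True, False):
        print(exclusive, " | ".join(vcpu_halt_path(exclusive)))
```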

Tuning Virtualization Layers:

When the VMXNET3 paravirtualized device is used for the VM's VNICs, VNIC interrupt coalescing and LRO (large receive offload) are automatically disabled to reduce response time and its jitter. Although such tunings can help improve performance, they may have a negative side effect in certain scenarios. If the hardware supports SR-IOV and the VM doesn't need certain virtualization features such as vMotion, NetIOC, and Fault Tolerance, we recommend using a pass-through mechanism, single-root I/O virtualization (SR-IOV), together with the latency-sensitivity feature.
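The VNIC behavior and the SR-IOV recommendation above can be summarized as two small rules. The sketch below is illustrative only: `VnicState`, `apply_latency_tunings`, and `recommend_sriov` are hypothetical names, and the string "high" stands in for the UI's High setting.

```python
from dataclasses import dataclass
from typing import Set

# Features the recommendation names as not needed if SR-IOV is to be used.
PASSTHROUGH_BLOCKERS = {"vMotion", "NetIOC", "Fault Tolerance"}


@dataclass
class VnicState:
    """Hypothetical view of a VNIC's latency-relevant settings."""
    adapter: str                     # e.g. "vmxnet3" or "e1000"
    interrupt_coalescing: bool = True
    lro: bool = True


def apply_latency_tunings(vnic: VnicState, latency_sensitivity: str) -> VnicState:
    """Model the automatic VMXNET3 tunings (illustrative only)."""
    if latency_sensitivity == "high" and vnic.adapter == "vmxnet3":
        # Interrupt coalescing and LRO are turned off to cut response time.
        return VnicState(vnic.adapter, interrupt_coalescing=False, lro=False)
    return vnic


def recommend_sriov(host_supports_sriov: bool, required_features: Set[str]) -> bool:
    """Recommend SR-IOV only if supported and no blocking feature is required."""
    return host_supports_sriov and not (required_features & PASSTHROUGH_BLOCKERS)


if __name__ == "__main__":
    print(apply_latency_tunings(VnicState("vmxnet3"), "high"))
    print(recommend_sriov(True, {"vMotion"}))
```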
